About Me

I am an Applied Scientist and Tech Lead at Amazon, conducting research at the intersection of Personalization, Recommendation, and Large Language Models. My work connects natural language understanding, information retrieval, and foundation model fine-tuning with generative recommendation. It is grounded in extensive experience building scalable end-to-end ML systems for real-world Ads, Search, and Recommendation applications at global scale.

My current research interests center on Large Language Models and their integration into retrieval-augmented generation (RAG) and agentic systems. Building on my experience deploying large-scale industrial ML products, I am particularly interested in how foundation models can be adapted to reason over structured and unstructured knowledge, enable grounded personalization, and support multi-agent collaboration, with the goal of advancing both the science and the practice of information retrieval and human-AI interaction.

Experience

- Applied Scientist, Amazon Prime Video, 2024 - present

- Applied Scientist, Amazon Advertising, 2021 - 2024

- PhD Researcher, University of Florida, 2018 - 2020

News

- 2023-04: Our paper was accepted to SIGIR 2023!

- 2022-11: Our paper was accepted to HCII 2022!

Publications

-

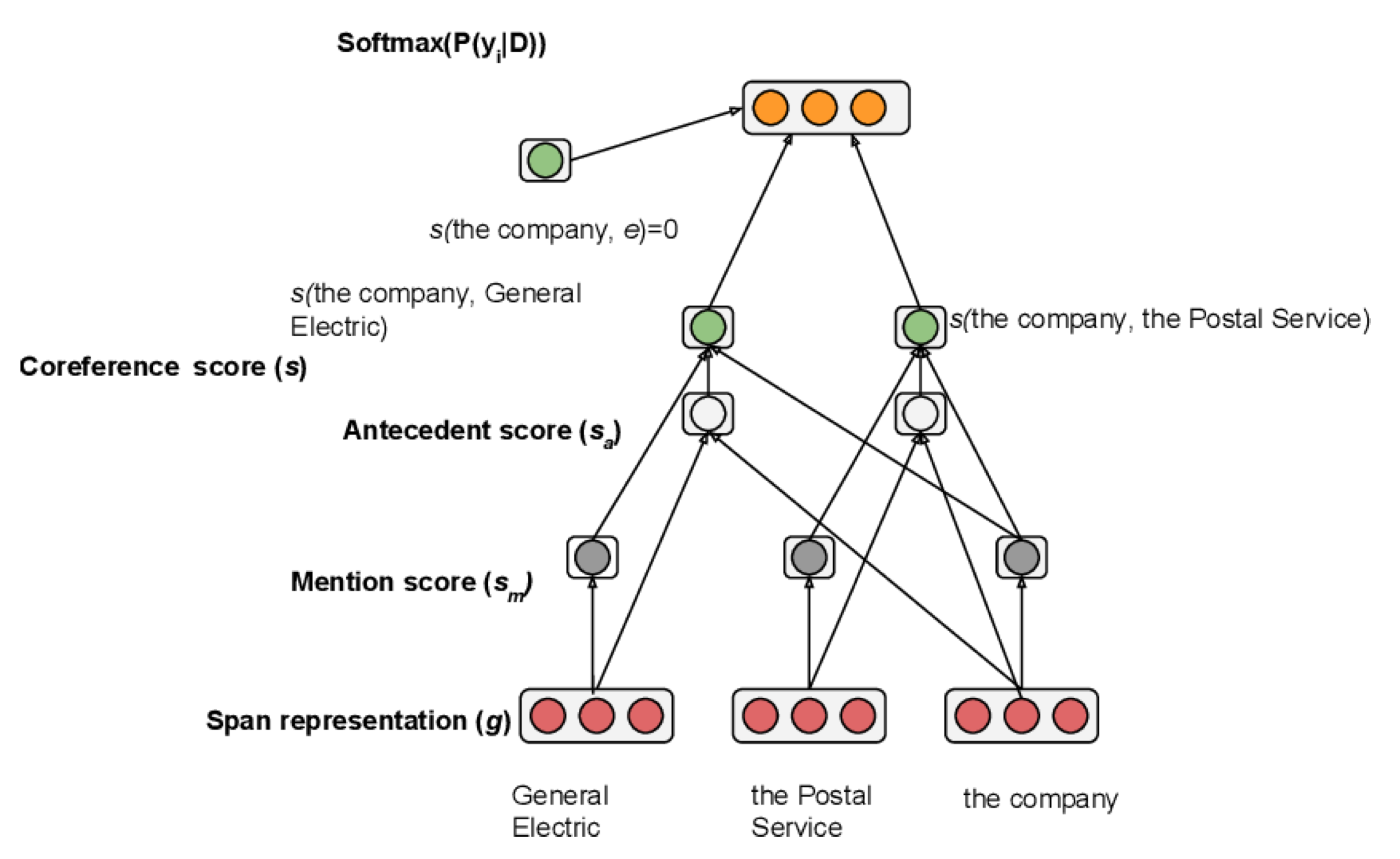

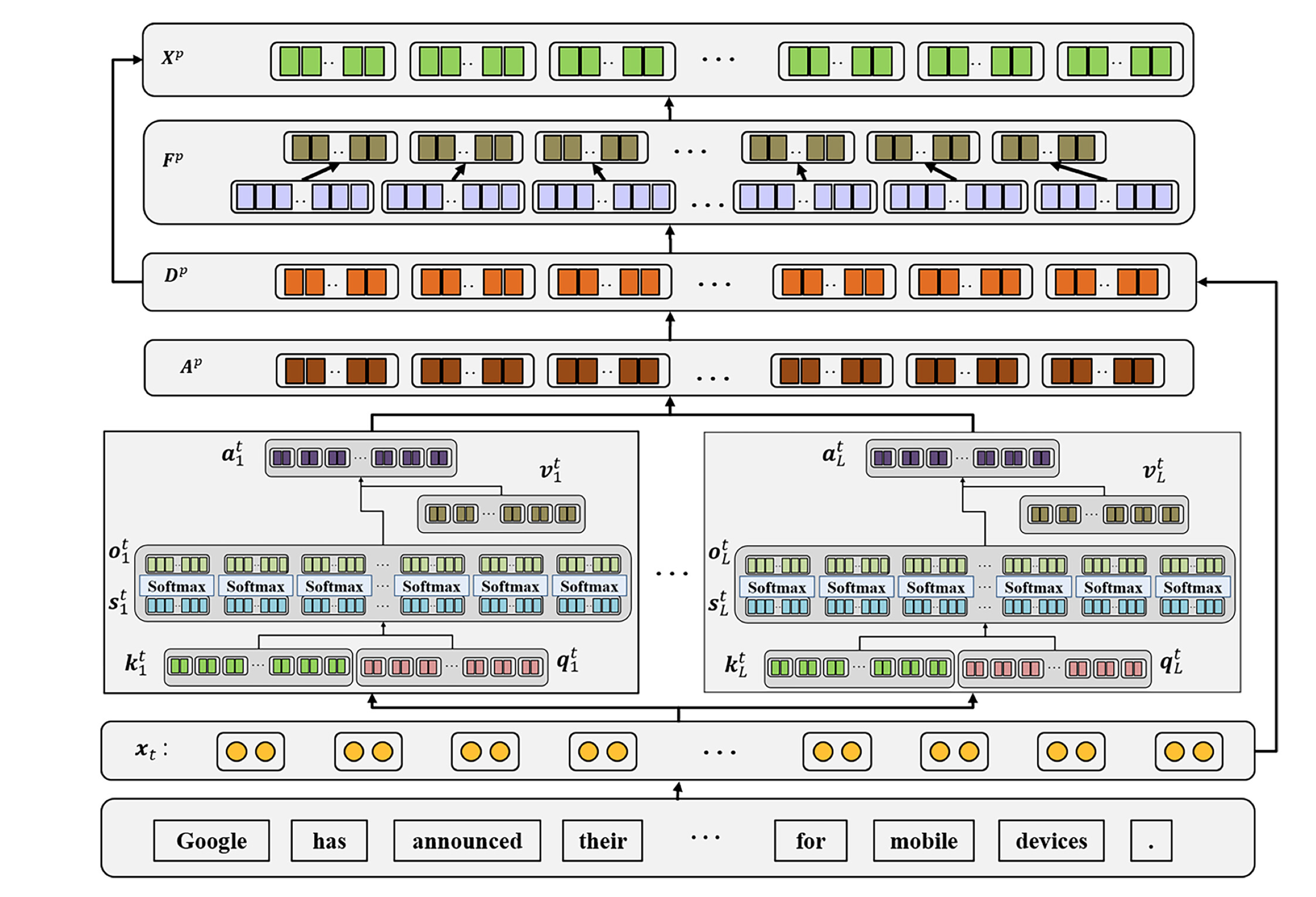

SIGIRProceedings of the 46nd International ACM SIGIR Conference (SIGIR), 2023.

SIGIRProceedings of the 46nd International ACM SIGIR Conference (SIGIR), 2023. -

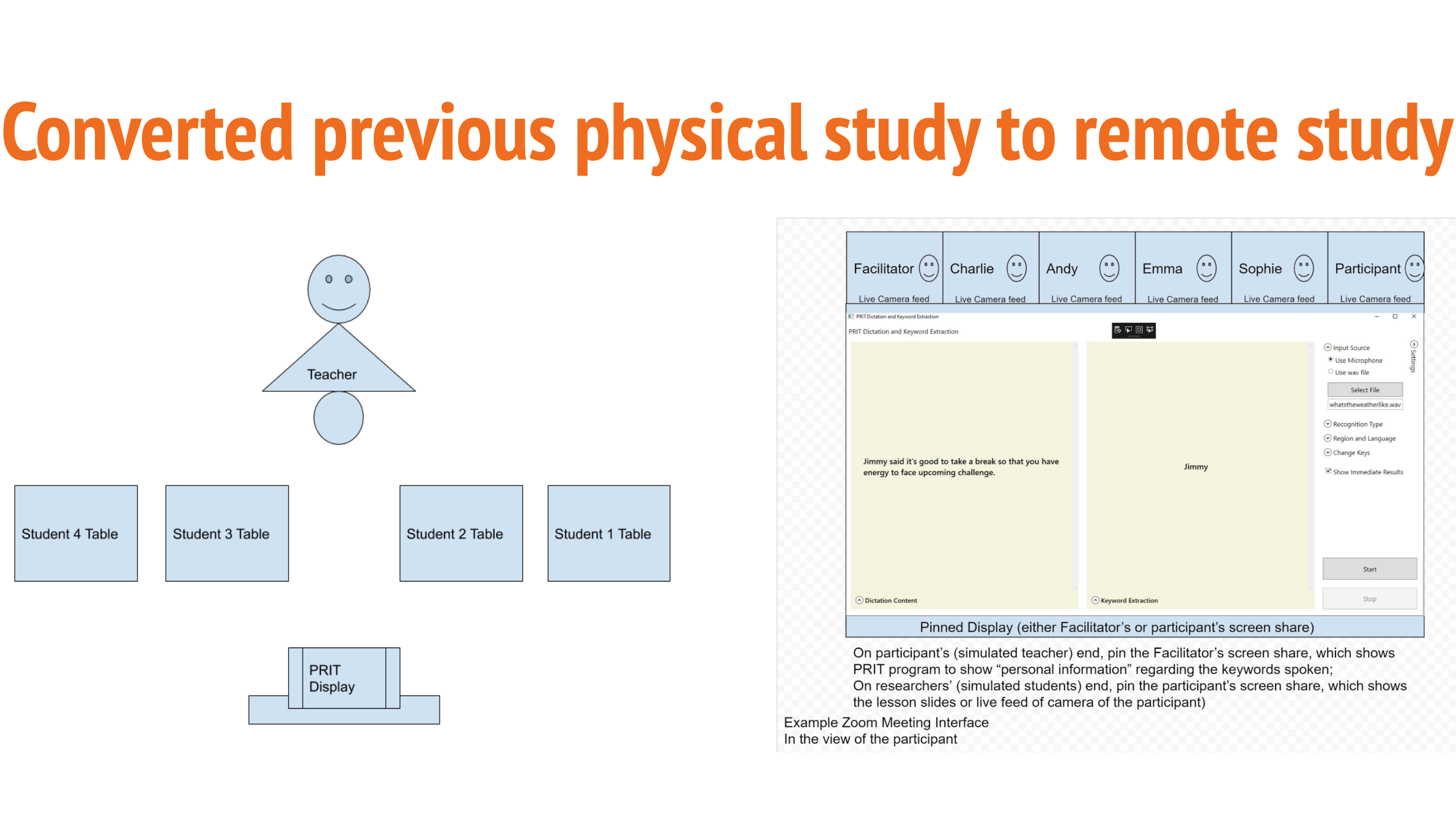

HCIIInternational Conference on Human-Computer Interaction (HCII), 2022.

HCIIInternational Conference on Human-Computer Interaction (HCII), 2022. -

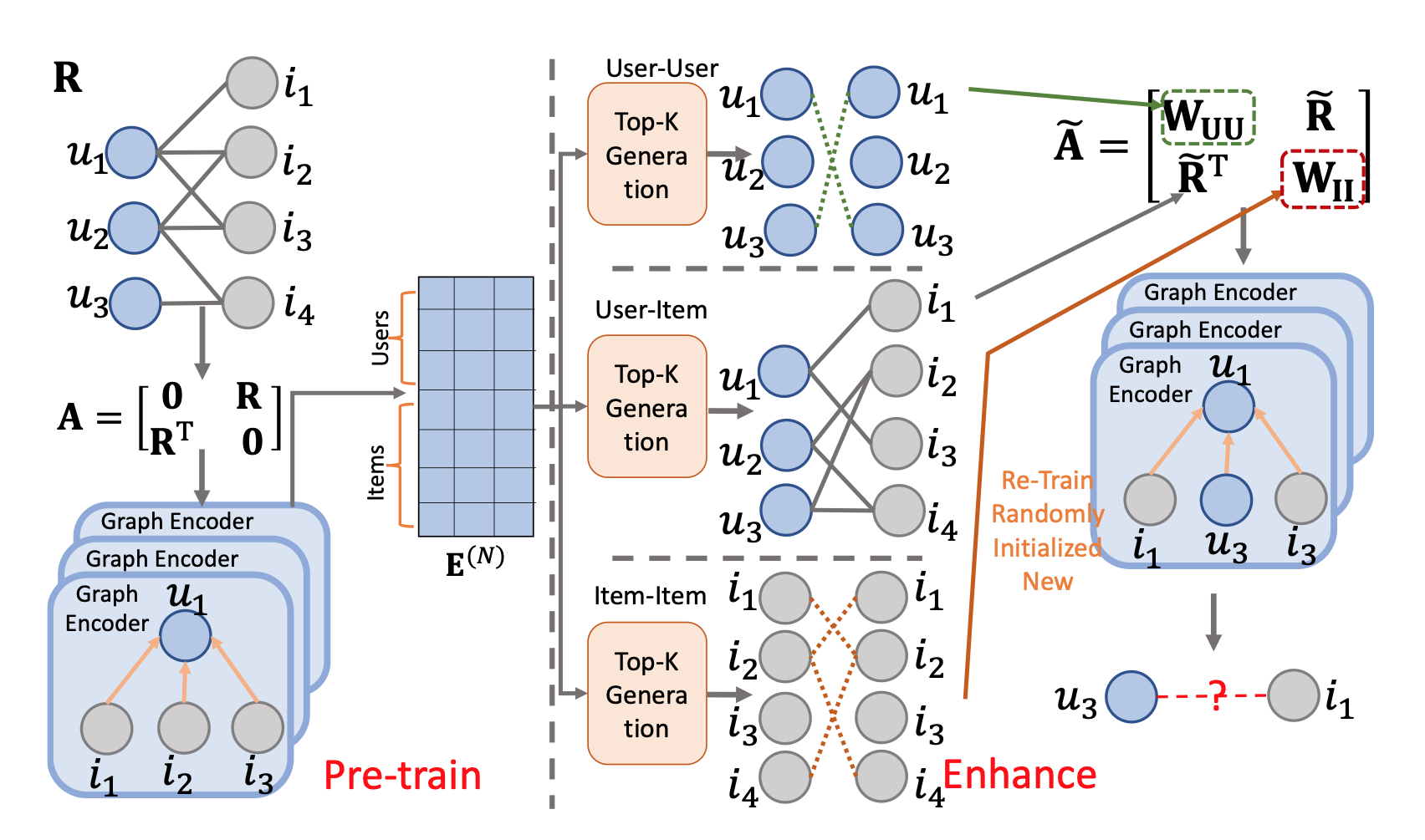

Preprint

Preprint

Services

- Reviewer @ NeurIPS, CVPR, SIGIR, COLING
